Text 2: Building a GPU Text Editor

Milestone 55: Conclusion

As of: 2024-08-06

The previous exploration, Text 1: Rendering and Editing, used a simple pixel buffer for rendering. The primary goal of this exploration is to build a simple GPU-rendered, monospaced text editor and learn graphics programming. It's in reverse chronological order.

55: Conclusion

This is the best stopping point for this exploration. Getting here has provided me with hands-on experience in shader programming, UV image mapping, texture rendering, orthographic projection, camera movement, texture atlases, occlusion culling, geometry instancing, font rendering, text manipulation, simple file management, and building a command interface. Each item was discovered at the speed of need. In other words, when looking at my list of requirements and expectations, I chose a balance between the perceived difficulty and logical progression. That said, I didn’t spend too much time overthinking it. The process was all about discovery and finding answers to questions.

You may be wondering if I wrote tests along the way. I didn’t. Tests are for solidified code meant for customers. However, notes, comments, and simplicity then became essential in this recreational project. I can’t imagine how challenging this would have been if I also had to redo tests based on false assumptions and decisions made from ignorance. If I were to build a product now, I would write tests with the lessons learned from this experience in mind.

Thanks

54: Create, Read, Update, Delete

Notes

- The application restarts when there is a change to the directory.

- The `delete` command requires the full text and the file name to execute. It's not a permanent feature. In a larger application, I'll move the file to the operating system's trash and have the user perform deletion.

53: Open Command

Notes

- Make It Work. Make It Right. Make It Fast.

52: Quit Command

Notes

- The one thing I don't like about Helix is that the command line does not support modes with the `H` and `L` keys for cursor navigation. It completely disrupts my flow.

- Pressing `:` while in normal mode activates the command line in insert mode. Pressing `ESC` returns it to normal mode, and pressing `ESC` again returns you to the document.

- There is no need to return to normal mode to execute a command.

- Why Humans Avoid War by Space Paladin. (Lorem Ipsum stops being fun after a while).

51: Character Deletion III

50: Character Insertion III

Notes

- The tricky part is ensuring that the cursor moves to the correct line when the word it rests on should be on the next line. This required a complete rewrite of how the application displays text. Naively rendering based on the cursor's position on the screen was not correct, but it wasn’t in vain. Instead, the application now keeps track of the character index that should be in the top left corner and expands from there.

- More backtracking is required to fix scrolling and cursor movement.
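A minimal sketch of laying out the view from a top-left character index. It assumes plain character wrapping at a fixed column count, not the word wrapping the editor uses, and `visible_rows`, `top_index`, `cols`, and `rows` are hypothetical names.

```python
def visible_rows(text: str, top_index: int, cols: int, rows: int) -> list[str]:
    """Lay out up to `rows` rows of at most `cols` characters, starting at
    the character index pinned to the top-left corner of the view."""
    out: list[str] = []
    row = ""
    for ch in text[top_index:]:
        if len(out) == rows:
            break
        if ch == "\n":
            out.append(row)  # a newline always ends the current row
            row = ""
            continue
        if len(row) == cols:
            out.append(row)  # the row is full: wrap before placing ch
            row = ""
            if len(out) == rows:
                break
        row += ch
    if row and len(out) < rows:
        out.append(row)
    return out
```

Scrolling then becomes a matter of changing `top_index` rather than moving text, and the cursor's row can be derived from where its index lands in the result.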

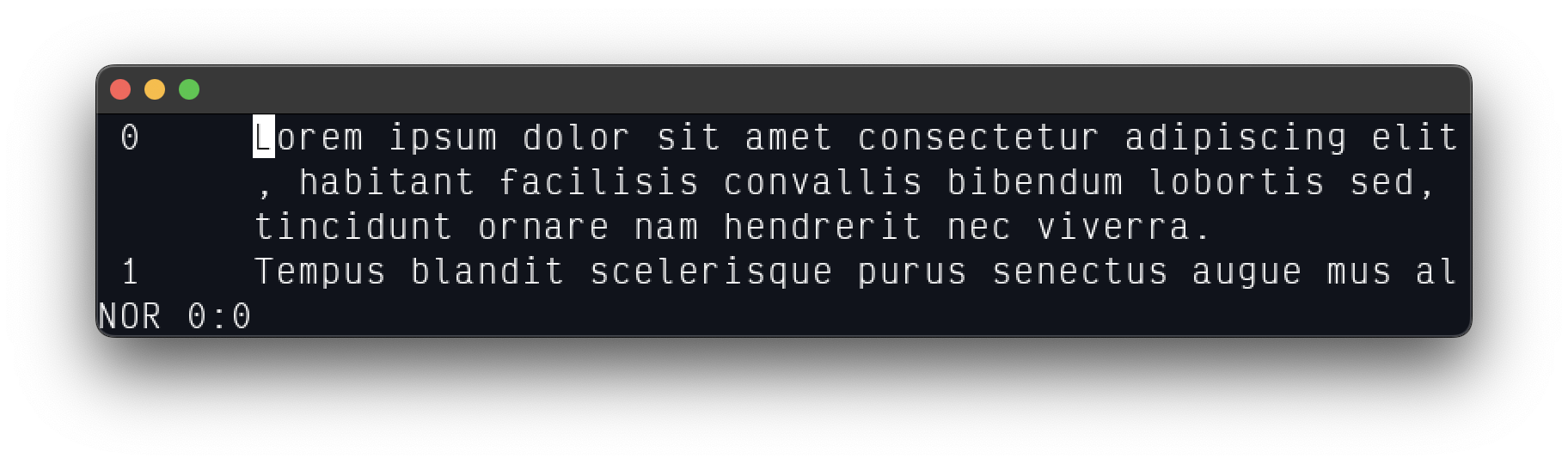

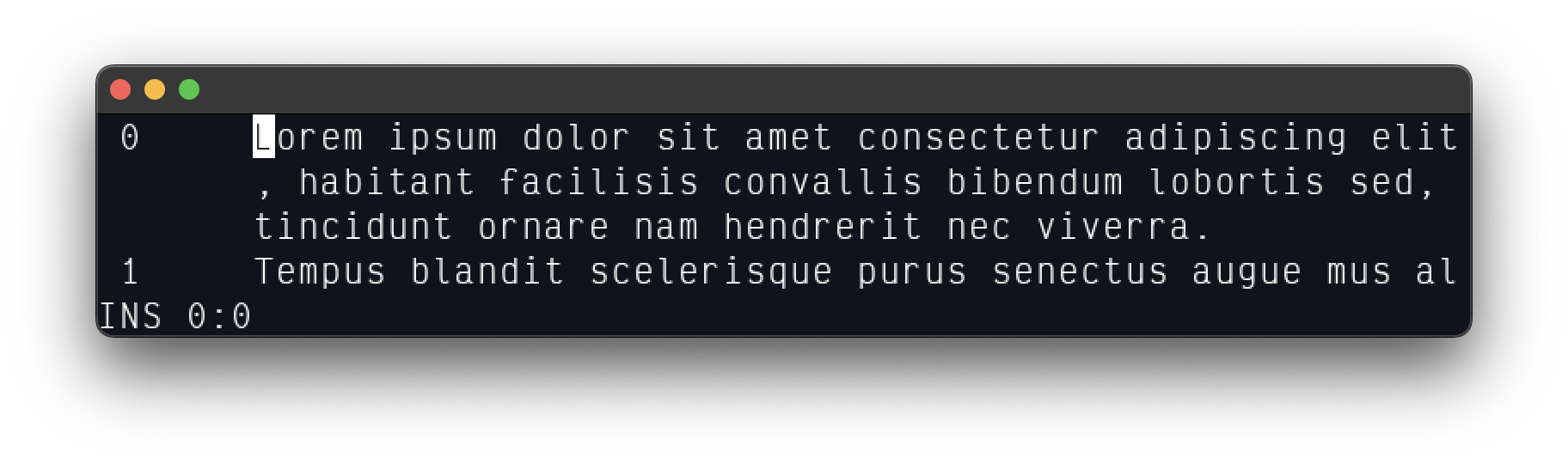

49: Status Line

Normal Mode

Insert Mode

48: Jump to Start and End and Drag From Cursor

Notes

- Under the hood there exists a modal system.

- To jump to the start of the file, press `g` in `major_normal_mode` to set the `minor_mode` to `goto`, then `g` again to execute the `goto_file_start()` command.

- To execute `goto_last_line()`, press `ge` while also in `major_normal_mode`.

- To drag the view of the file, simply hold the shift key and press `J` or `K`. Helix allows for this with the following configuration.

[keys.normal]

S-j = "scroll_up"

S-k = "scroll_down"

# ...
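A minimal sketch of how such a two-level modal dispatch might look. The mode and command names come from the notes above; the `Editor` class, its fields, and `on_key` are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Editor:
    major_mode: str = "major_normal_mode"
    minor_mode: Optional[str] = None
    cursor_line: int = 0
    last_line: int = 0

    def goto_file_start(self) -> None:
        self.cursor_line = 0

    def goto_last_line(self) -> None:
        self.cursor_line = self.last_line

    def on_key(self, key: str) -> None:
        if self.major_mode != "major_normal_mode":
            return
        if self.minor_mode == "goto":
            # The second key of the sequence selects the command.
            if key == "g":
                self.goto_file_start()
            elif key == "e":
                self.goto_last_line()
            self.minor_mode = None  # any key leaves the minor mode
        elif key == "g":
            self.minor_mode = "goto"  # first key: wait for the follow-up


editor = Editor(cursor_line=10, last_line=99)
for key in "ge":
    editor.on_key(key)  # cursor_line is now 99
```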

47: Cursor Movement III

Notes

- H J K L for movement.

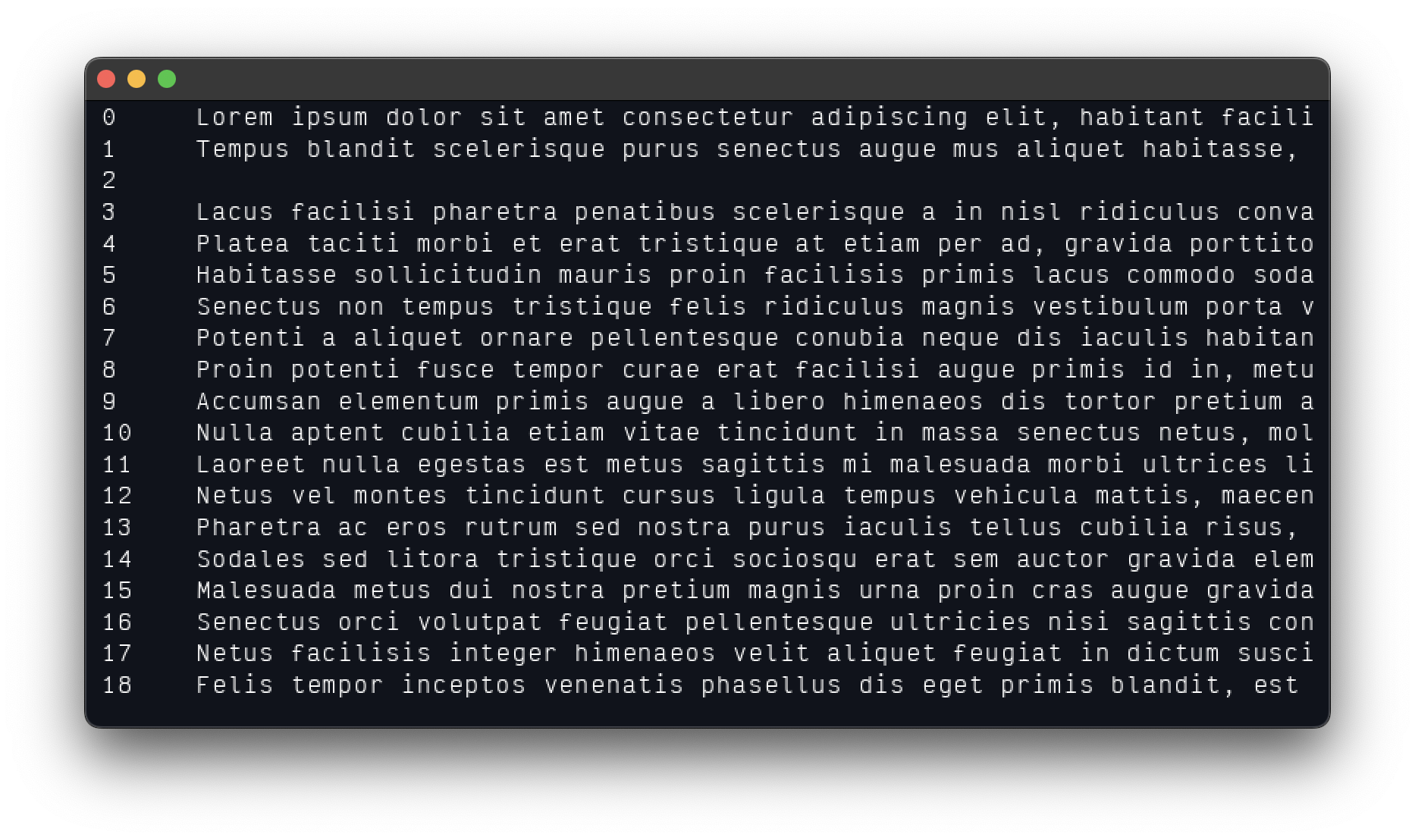

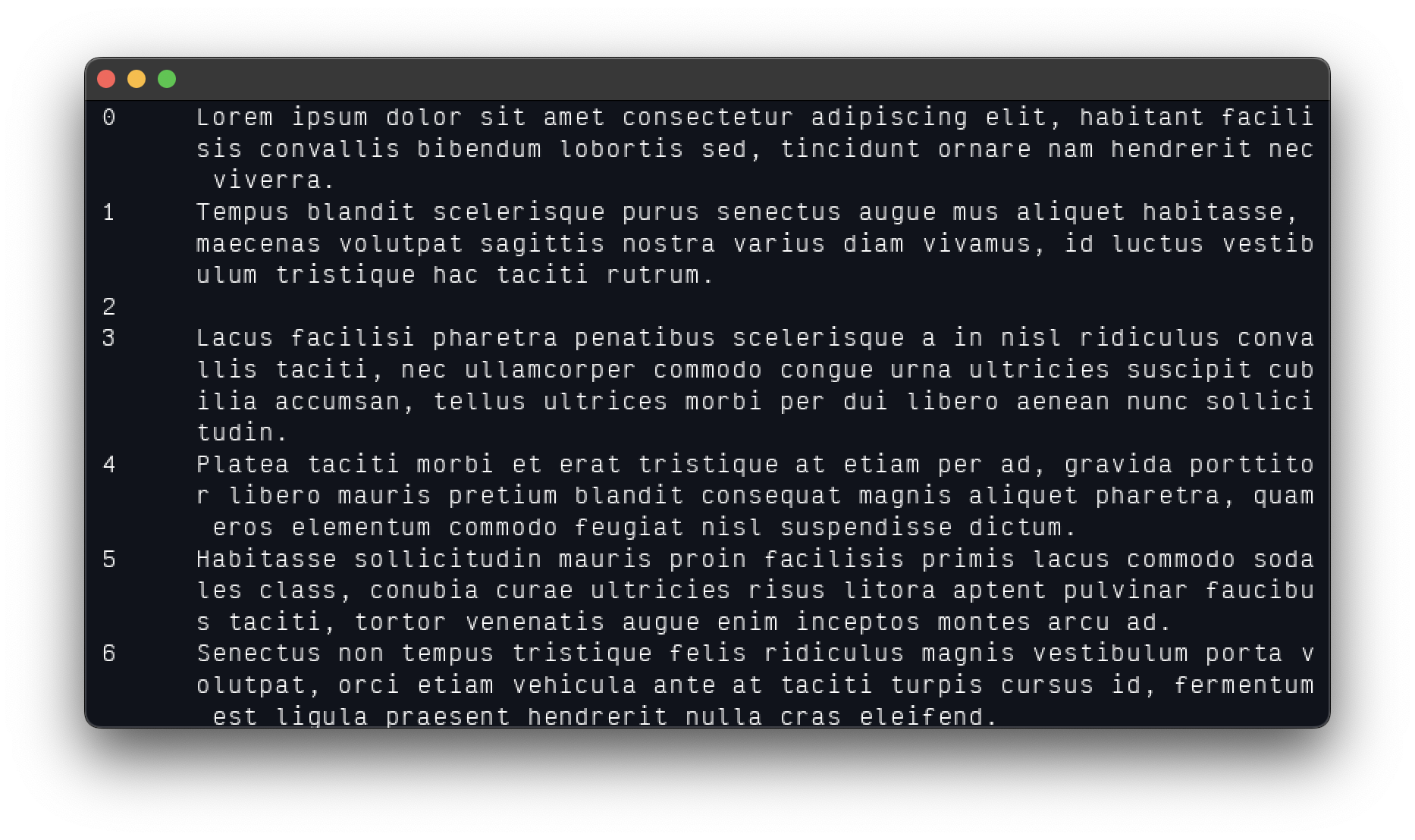

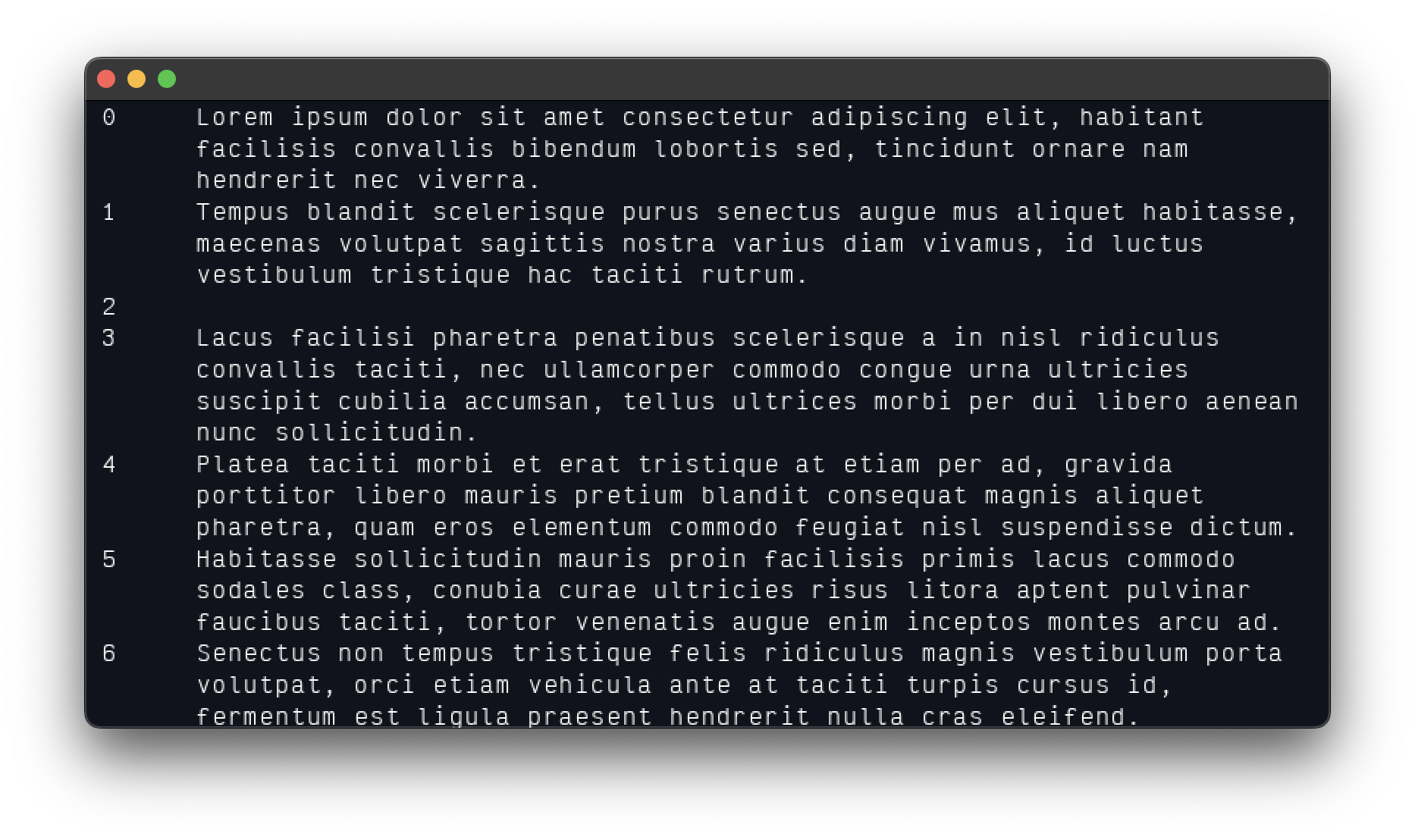

46: Line Numbers

Unwrapped

Line Wrapped

Word Wrapped

Notes

- The gap is large because the file contains 59,000 lines and there must be a single cell gap between the numbers and the text.
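The gutter arithmetic can be sketched as follows, assuming the gutter is sized by the digit count of the largest line number plus the one-cell gap. `gutter_width` is a hypothetical helper.

```python
def gutter_width(line_count: int, gap: int = 1) -> int:
    """Cells reserved for line numbers: one per digit of the largest
    line number, plus the gap before the text."""
    return len(str(max(line_count, 1))) + gap


print(gutter_width(59_000))  # 5 digits + 1 gap cell
```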

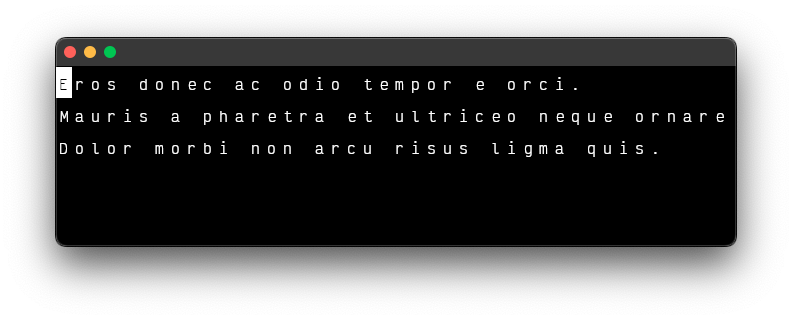

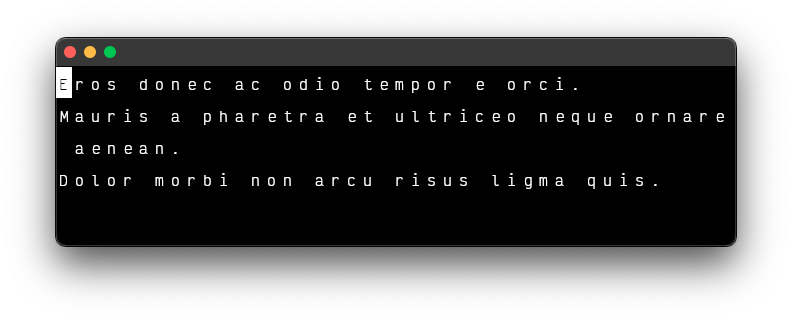

45: Word Wrap II

Notes

- The word wrap algorithm found through a search engine does not account for multiple spaces between words.
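One way to handle runs of spaces, sketched under the assumption that every character must be kept so that cursor indices still map onto the text. This is a greedy wrap that tokenizes into words and space runs; it is not the algorithm the editor uses, and `word_wrap` is a hypothetical name.

```python
import re


def word_wrap(line: str, cols: int) -> list[str]:
    """Greedy word wrap that keeps every character, including runs of
    spaces. Assumes cols >= 1."""
    rows: list[str] = []
    row = ""
    for token in re.findall(r" +|[^ ]+", line):
        is_word = not token.startswith(" ")
        # A word that does not fit starts on a new row.
        if is_word and row and len(row) + len(token) > cols:
            rows.append(row)
            row = ""
        # Anything still too long (a space run or an over-long word)
        # is split at the row edge.
        while len(row) + len(token) > cols:
            room = cols - len(row)
            rows.append(row + token[:room])
            token = token[room:]
            row = ""
        row += token
    if row:
        rows.append(row)
    return rows
```

Joining the returned rows reproduces the input exactly, which is the property a naive `split(" ")` based wrap loses.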

44: Line Wrap II

43: Drawing and Moving Cursor II

Notes

- Reimplementation of milestones 24 through 30, which cover cursor movement. Horizontal and vertical movement by character and by word. The camera follows the cursor by checking for non-collision with the camera's padded bounds using axis-aligned bounding boxes (AABB) and translates the camera by the off-distance.

- When dealing with unwrapped lines, it's important to keep a record of the cursor's initial column while navigating vertically and to clear the record once the user returns to horizontal movement. This is simply to align my expectations with other text editors.

- As a bonus feature, `ALT+HOME` moves the cursor to the first character, `ALT+END` moves it to the last. `HOME` and `END` move the camera to the start and end of the document, respectively.

- Reduced the atlas block list to a single block by rendering specific characters rather than the entire character map.
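The camera-follow check described in the first note can be sketched like this. It assumes axis-aligned rectangles given by a corner and a size; `Rect`, `axis_shift`, and `follow` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Rect:
    x: float
    y: float
    w: float
    h: float


def axis_shift(lo: float, hi: float, inner_lo: float, inner_hi: float) -> float:
    """Distance to move the span [lo, hi] so it contains [inner_lo, inner_hi]."""
    if inner_lo < lo:
        return inner_lo - lo
    if inner_hi > hi:
        return inner_hi - hi
    return 0.0


def follow(camera: Rect, cursor: Rect, padding: float) -> Rect:
    """Translate the camera by the distance the cursor sits outside the
    camera's padded bounds. The camera stays put while the cursor is inside."""
    dx = axis_shift(camera.x + padding, camera.x + camera.w - padding,
                    cursor.x, cursor.x + cursor.w)
    dy = axis_shift(camera.y + padding, camera.y + camera.h - padding,
                    cursor.y, cursor.y + cursor.h)
    return Rect(camera.x + dx, camera.y + dy, camera.w, camera.h)
```

Handling each axis separately keeps the rule the same for horizontal and vertical movement.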

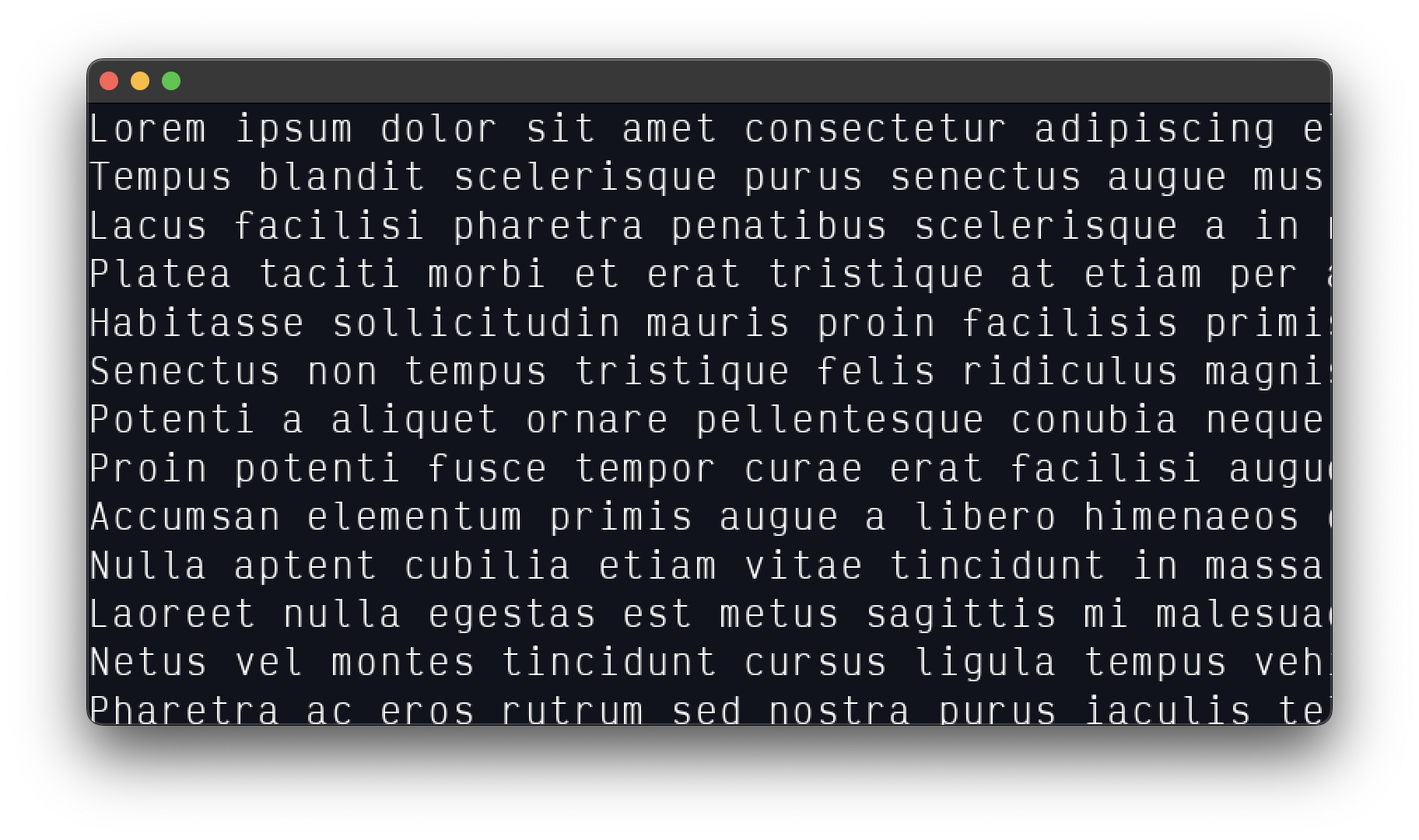

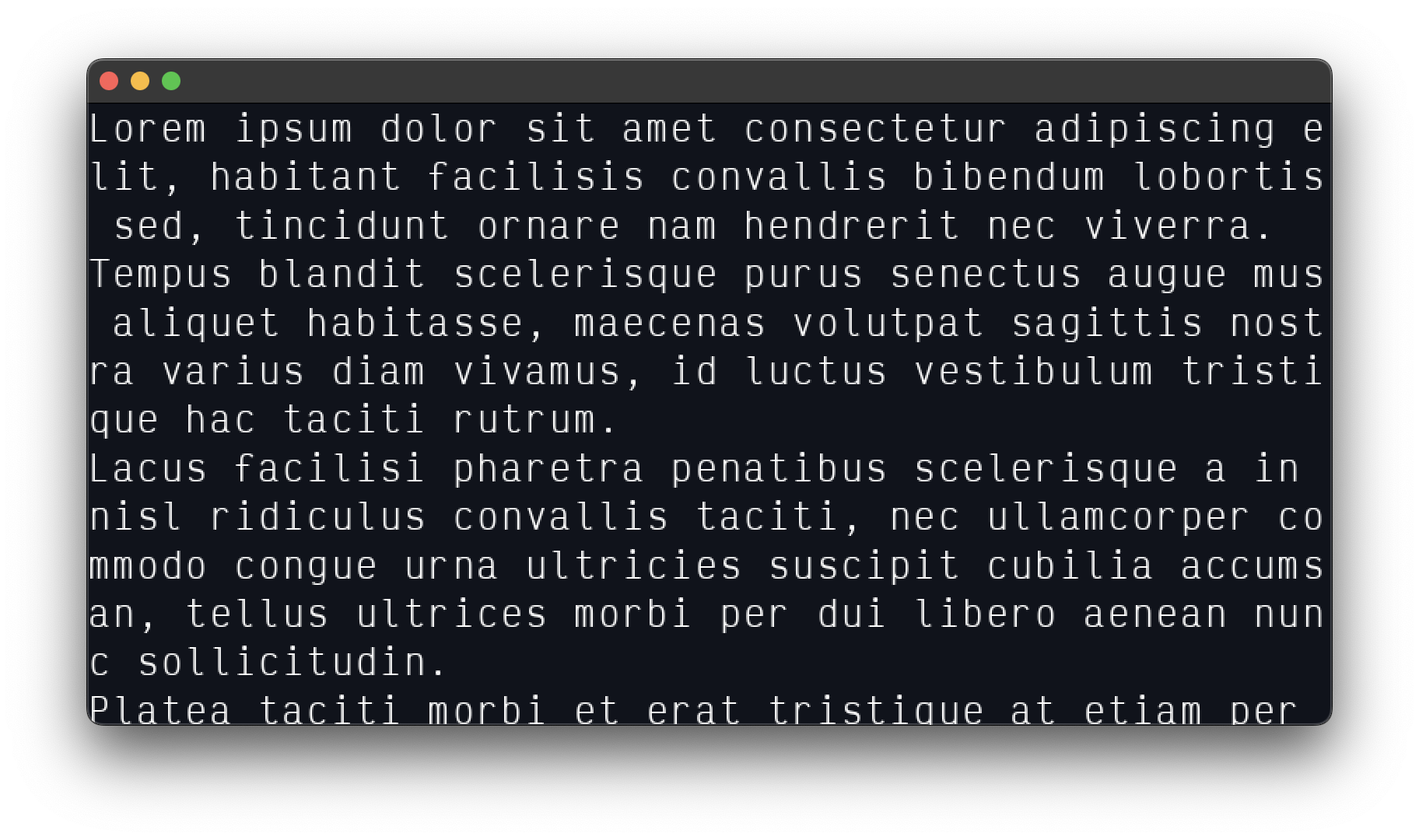

42: Scrolling Through a 10 MB File.

Notes

- A 10MB file with 56,900 lines loads near instantly.

- The file is generated with `getlorem --units bytes --count 10000000 --swl > 10MB.txt`, but it produces a single line so be sure to select all of the sentence endings (`\.`) and replace them with new lines (`\.\n`).
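The find-and-replace step could also be scripted. This is a small sketch that assumes sentences end with a period optionally followed by whitespace; swallowing that whitespace is an assumption, and `split_sentences` is a hypothetical helper.

```python
import re


def split_sentences(text: str) -> str:
    """Put each sentence on its own line by breaking after every period."""
    return re.sub(r"\.\s*", ".\n", text)
```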

41. Vertical Scrolling.

Notes

- "No plan survives first contact with the enemy."

- It turns out, there was no need to append the columns and rows to ensure smooth scrolling. Simply adding 2 to the `col_count` and `row_count` is just enough to allow glyphs to peek into visibility. This is because, based on the camera's distance from 0, I can offset which rows and columns to extract from the text. To clarify, if the camera moves the distance of a cell along the y axis, then the `row_offset` is set to 1. Then, all that is left is to iterate from 1 to the number of visible columns or the last line index of the file. This should work for horizontal scrolling as well.
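A sketch of the offset calculation for the vertical axis. It assumes the camera's y position grows as the view scrolls down and is measured in the same units as the cell height; `visible_line_range` and its parameters are hypothetical names.

```python
def visible_line_range(camera_y: float, cell_height: float,
                       viewport_height: float, line_count: int) -> range:
    """Indices of the lines to extract from the text for the current view."""
    row_offset = int(camera_y // cell_height)            # whole cells scrolled past
    row_count = int(viewport_height // cell_height) + 2  # 2 spare rows peek in at the edges
    first = max(row_offset, 0)
    last = min(first + row_count, line_count)            # stop at the end of the file
    return range(first, last)


# A camera one cell down a 320-unit-tall view of 16-unit cells:
print(visible_line_range(16, 16, 320, 56_900))  # range(1, 23)
```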

40. Text Area

Notes

- Simply render to a texture and then render the texture to the screen.

- This setup requires 2 cameras. One for the application window and another for the text area pane.

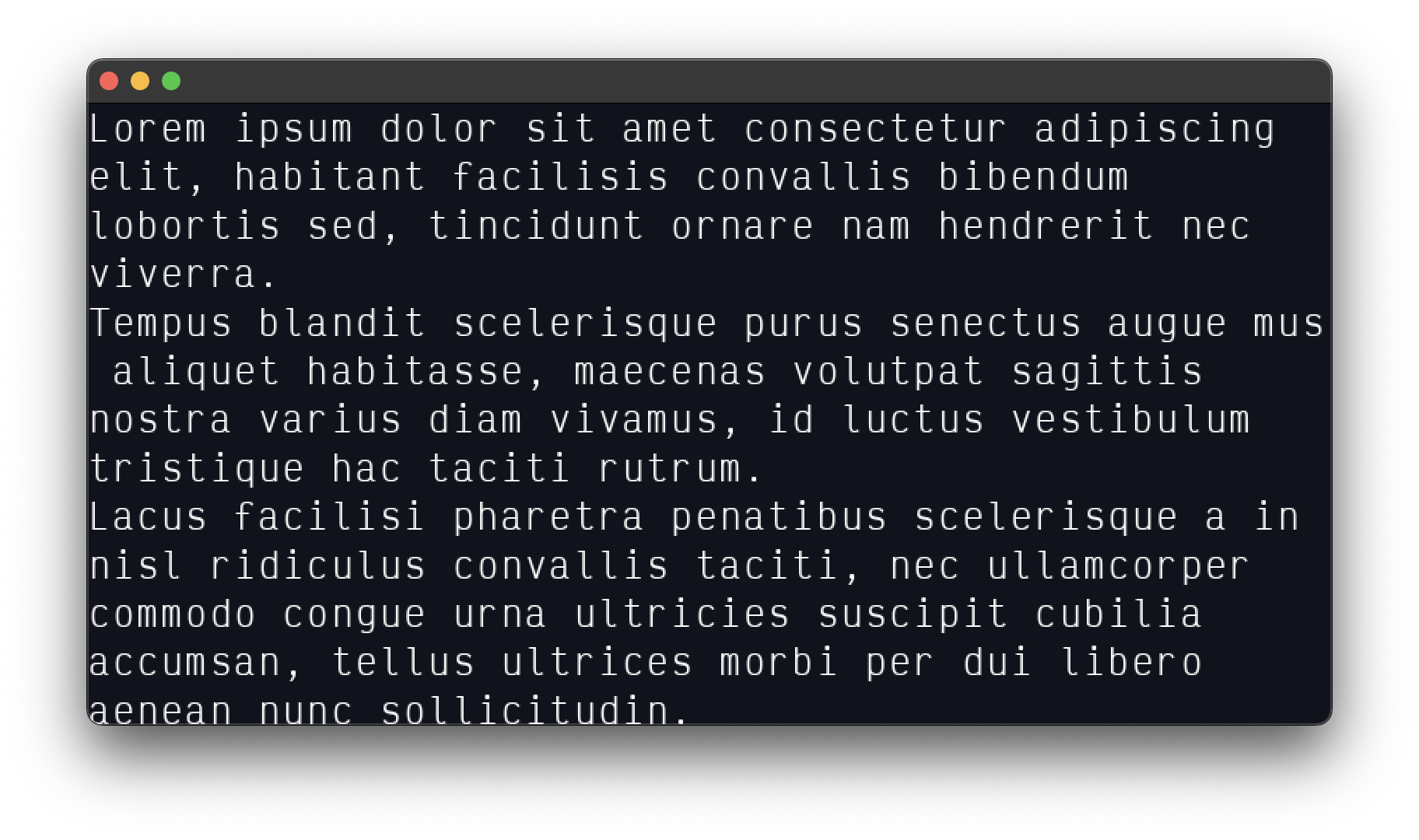

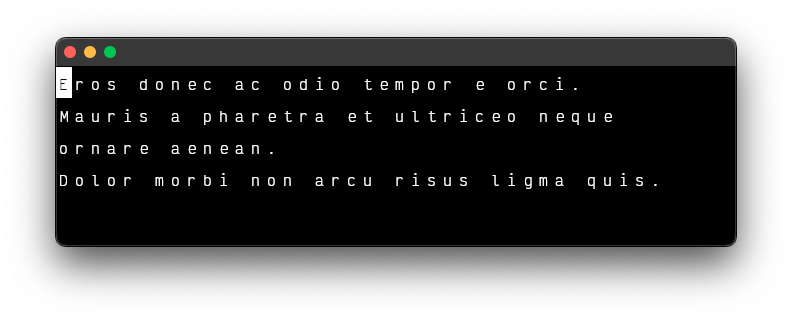

39. Rendering a Story

Notes

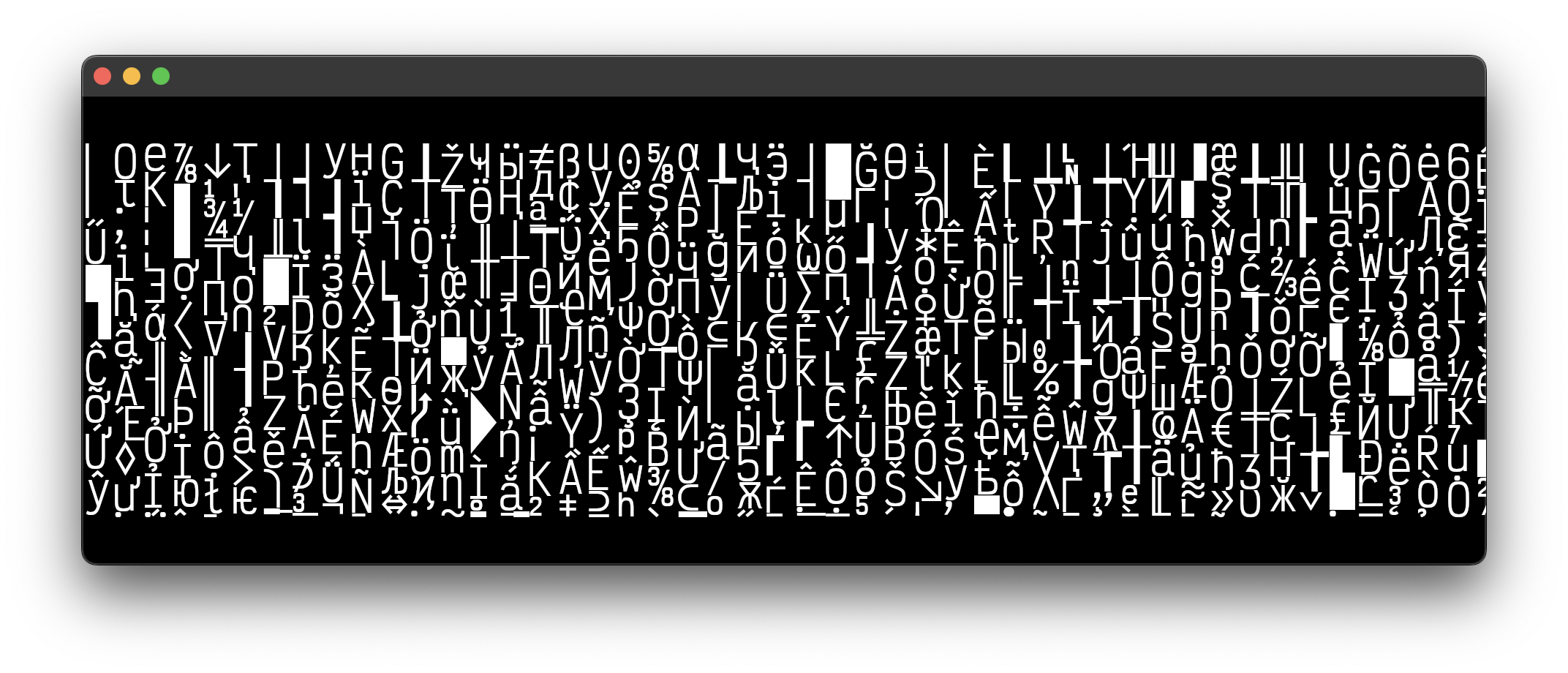

- Instancing the glyphs requires the use of a texture array. The instanced object needs the glyph's scale, position, the image index within the atlas list, and UV coordinates. Resizing the window reveals more or less of the story along both the x and y axes depending on the dimensions of the window. It is not the result of culling, but a change to the instance data, which grows and shrinks as needed. I'll have to keep an eye on this implementation's performance once I get to rendering multiple documents.

- The video displays the first few unwrapped lines of Why Humans Avoid War by Space Paladin. (Lorem Ipsum stops being fun after a while).
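A sketch of what one glyph's instance record might contain, packed into bytes the way a vertex buffer upload would need. The field order, the types, and the `GlyphInstance` name are assumptions. The source only lists which values the instance carries.

```python
import struct
from dataclasses import dataclass


@dataclass
class GlyphInstance:
    scale: tuple[float, float]              # quad width and height
    position: tuple[float, float]           # quad translation in world units
    atlas_index: int                        # layer within the texture array
    uv: tuple[float, float, float, float]   # u0, v0, u1, v1

    def pack(self) -> bytes:
        # 4 floats, 1 unsigned int, 4 floats: little-endian, no padding.
        return struct.pack("<4fI4f", *self.scale, *self.position,
                           self.atlas_index, *self.uv)


def pack_instances(instances: list[GlyphInstance]) -> bytes:
    """Concatenate the records. The buffer grows and shrinks with the list."""
    return b"".join(i.pack() for i in instances)
```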

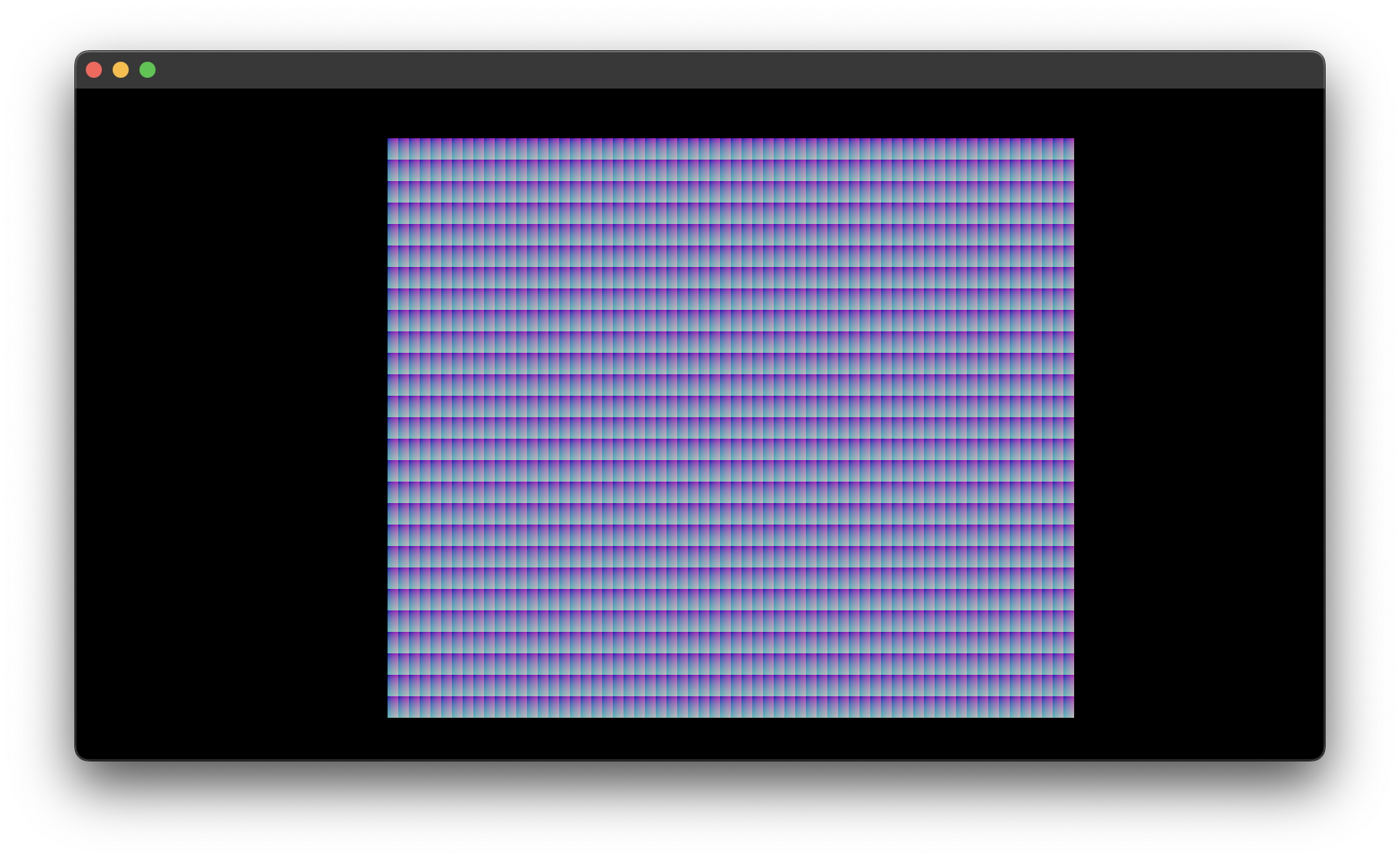

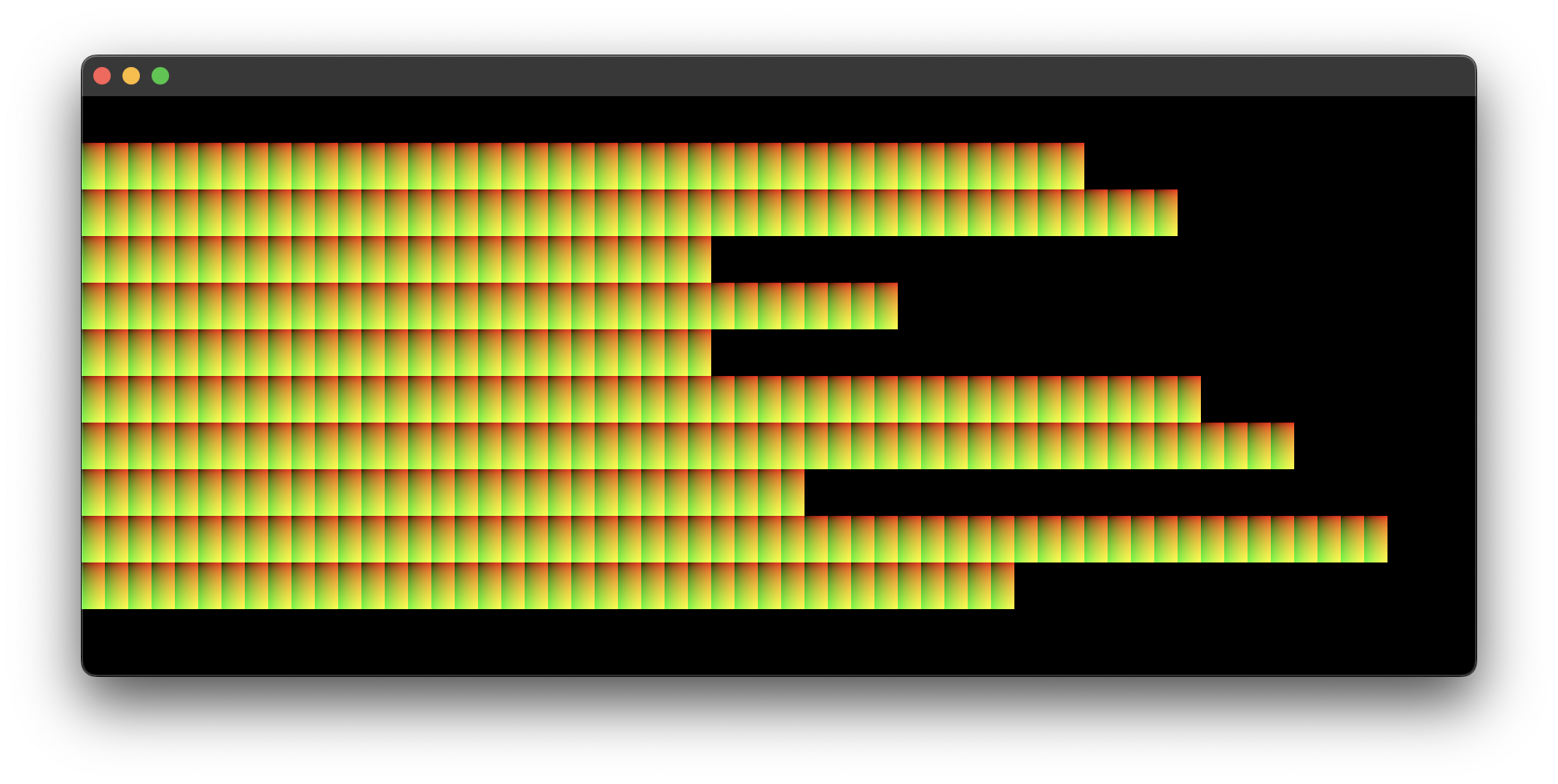

38. Cell Instancing

Notes

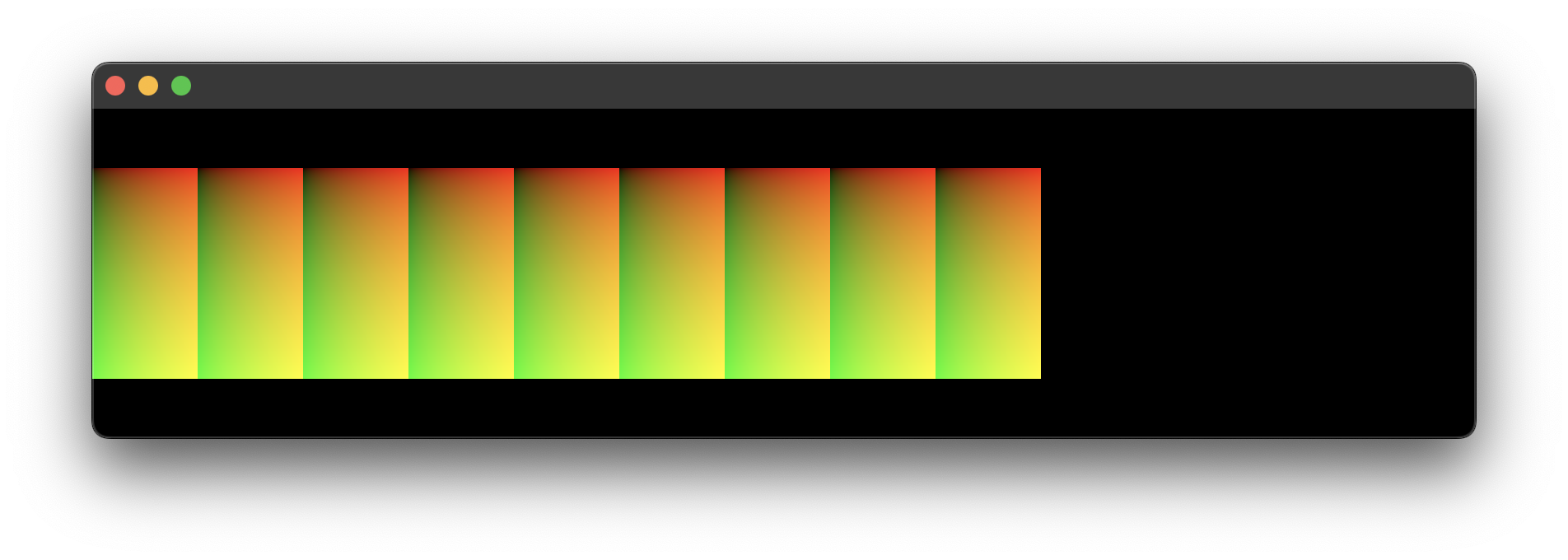

The problem was that each cell had its own buffer, which required iterating through every one of them for re-positioning when the window was resized. Before delving into threads, polling, and also recalling Casey Muratori's Lectures on Optimization, I decided to see how far I could go without increasing the system's complexity. After all, computers today are orders of magnitude faster than what was available in the days of the VT100, so the issue must have been with my implementation.

While learning about instancing, I discovered that there is no need to send the entire 4 by 4 transformation matrix to the GPU. These debug cells only require the x and y scales and translations. So I opted to send just these four values over and build the matrix within the shader. As a test, I then lowered the cell size to 8 by 16 units and, to my surprise, discovered that the application was able to transition from rendering 100 cells to over 60,000 without stuttering.
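The matrix the shader rebuilds from those four values can be sketched on the CPU. This is an illustration in Python, not shader code, and it writes the matrix in row-major form. A shading language may expect column-major order.

```python
def cell_matrix(sx: float, sy: float, tx: float, ty: float) -> list[list[float]]:
    """4x4 matrix (row-major) that scales by (sx, sy), then translates by (tx, ty)."""
    return [
        [sx, 0.0, 0.0, tx],
        [0.0, sy, 0.0, ty],
        [0.0, 0.0, 1.0, 0.0],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform(m: list[list[float]], x: float, y: float) -> tuple[float, float]:
    """Apply the matrix to the point (x, y, 0, 1) and return the new x and y."""
    v = (x, y, 0.0, 1.0)
    out = [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]
    return out[0], out[1]


# The unit quad's corner (1, 1) for an 8 by 16 cell placed at (100, 50):
print(transform(cell_matrix(8, 16, 100, 50), 1, 1))  # (108.0, 66.0)
```

Sending four floats per instance instead of sixteen cuts the per-cell upload to a quarter.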

37. Rendering the Grid. Again.

Notes

That was easy, but it's a bit slow. Up until this point the application rendered large cells which hid the performance problem that occurs when the cells are set to a reasonable size. What's the deal? It's just rendering UV Quads. Along the way, I finally got around to maintaining a consistent cell size across screen DPIs. As mentioned before, macOS screens have twice the DPI of standard monitors. So a box width of 40 units will appear to be 20 units.

Intermission II

- Render a single cursor.

- Insert characters at the cursor's position within the text.

- Move the cursor by character.

- Move the cursor by the next and previous word.

- Insert new lines at will.

- Toggle line-wrapping.

- Reposition the glyphs based on the window's width while maintaining the camera's view of the cursor.

- Create a text file.

- Edit files in memory.

- Save files to disk.

Houston, we have a problem

While I've learned a great deal to get to this point, I realize that I made a wrong turn back at step 21. The problem lies with the LineLattice. Every line of the document is created and placed into the world even though only a fraction of the lines will ever be visible at once. I should have trusted my instincts and gone with a row count that is dependent on the height of the viewport. With that, I should be able to add extra rows on both the top and bottom of the grid as a buffer to allow for smooth scrolling. Once an off-screen line enters the viewport, I'll then take the furthest row from the opposite side and append it to the side of the scroll direction.

To clarify, let's say the screen supports 20 visible rows. The plan is to append and prepend, for example, 5 to each end, bringing the total row count to 30. 10 rows will be culled from rendering. Scrolling down will cause 1 buffered bottom row to become visible. At which point, I'll take the topmost row and position it at the bottom. The same can be done with the columns.
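The row-recycling plan can be sketched with a double-ended queue, where each entry is the document line a grid row currently displays. `make_rows`, `scroll_down`, and `scroll_up` are hypothetical names, and bounds checks against the start and end of the file are left out.

```python
from collections import deque


def make_rows(visible: int, buffer: int, first_line: int = 0) -> deque:
    """Grid rows for the viewport plus `buffer` spare rows on each end."""
    total = visible + 2 * buffer
    return deque(range(first_line, first_line + total))


def scroll_down(rows: deque) -> None:
    rows.popleft()              # take the topmost row...
    rows.append(rows[-1] + 1)   # ...and reuse it just below the bottom row


def scroll_up(rows: deque) -> None:
    rows.pop()                      # take the bottommost row...
    rows.appendleft(rows[0] - 1)    # ...and reuse it just above the top row


rows = make_rows(visible=20, buffer=5)  # 30 rows in total
scroll_down(rows)                       # still 30 rows, now showing lines 1..30
```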

They say a good night's rest is the best debugger. Searching for "NES Tilemaps" on YouTube led me to a video that illustrates what I'm trying to achieve: The Nintendo Entertainment System's Loading Seam - Retro Game Mechanics Explained.

36: Unwrapped Text, Line Wrapped, and Word Wrapped

35: Line Wrapping

34: Implementing Carriage Return Line Feed

33: Deleting Characters at the Cursor's Position.

32: Inserting Characters at the Cursor's Position.

31: Cursor Movement Across Words.

30. Following the Cursor on the Vertical Axis.

29. Vertical Cursor Movement.

28. Following the Cursor Through to the End and Back.

27. Horizontal Cursor Movement Across Lines.

26. Horizontal Cursor Movement.

25. Culling Debugging.

24. Basic Cursor Movement.

notes

- It's important to keep track of the cursor's last position to return a glyph's color back to an undistinguished state.

23. Drawing a Cursor.

notes

- There are two ways to draw the cursor. Either draw a transparent box under the glyphs or draw a fully opaque box and update the glyph's color in the shader.

22. The Culling.

21. Rendering Unwrapped Lines From a File.

notes

- The grid was not the best place to start. It led me to be far too concerned with the final result instead of the model. While trying to render a single line, I discovered that I could simply create the additional lines needed and group them into a `LineLattice`. This should make line-wrapping, culling, and possibly rendering line numbers much easier.

20. Lorem.txt

The project now contains a file named lorem.txt. It has seven paragraphs across thirteen lines, with the longest line containing 1,916 characters. The file uses 9 KB. If the grid were set to have a column count of 1,916 and a row count of thirteen, it would contain 24,908 cells. That would use far too much memory for off-screen characters. If I needed to use a 1 MB file, the cell count for unwrapped lines would be 1,277,783.

The cells have a 2:1 ratio, where the height is twice the size of the width. Their dimensions are used to determine the number of cells that can fit on the screen, as shown in sections 13 (Cell Column Count) and 16 (Line Breaking/Word Wrapping).

However, this speculative little calculation is a distraction. The next step is to render a single unwrapped line of the file. The lingering questions from the previous section are not yet relevant.

Intermission

I've had to backtrack and update my assumptions about text editors. For example, in section 14, I assumed that I'd always know how many cells I'd need based on the length of the body of text. That proved to be false once I reached the point of line wrapping, where I'd have to skip cells and move to a new line to avoid breaking a word across lines.

I'm sure I'll have to backtrack more as the project grows in complexity, so now is a good time to take a break, review the lessons learned, and try to think a few steps ahead.

I recently earned an ITILv4 Certification. Rather than brain dump (forget it until I'm questioned on the matter) the material in preparation for the next course, I've decided to try to put it to good use by following 4 of the 7 steps of their Continual Improvement Model.

1. What is the vision?

The editor should be able to do the following:

- Render a single cursor.

- Insert characters at the cursor's position within the text.

- Move the cursor by character.

- Move the cursor by the next and previous word.

- Insert new lines at will.

- Toggle line-wrapping.

- Reposition the glyphs based on the window's width while maintaining the camera's view of the cursor.

- Create a text file.

- View 1 or more text files.

- Edit files in memory.

- Save files to disk.

The end result will be a toy. Its purpose is to provide the requisite XP for more complex projects.

2. Where are we now?

We currently have:

- An infinite virtual space.

- A resizable grid.

- A sprite/glyph atlas for character glyphs.

- A movable orthographic camera.

- A mechanism to insert and delete characters.

- A mechanism to render characters on the grid.

3. Where do we want to be?

The proper next step is to render an essay. So far I can only render a sentence. The demos don't show the ability to render a new line if the text string has a new-line character (\n).

4. How do we get there?

- Place a large text file in the project directory.

- Determine the window of how many bytes to read at once to avoid loading the entire file into memory.

- Decode the bytes into a string.

- Render the text, line by line, without wrapping.

- Build a way to use the mouse wheel to move the byte window through the file and re-render.

- Build a way to scroll while holding the shift key to move the camera on the horizontal axis.

Lingering Questions

- How do I ensure the byte window size is large enough to fill the screen and reach the vertical culling zones?

- Do I scan an initial section, compare the line count of the decoded text with the grid's row count, then enlarge the window if there aren't enough? If so, I should be able to scan from the end of the byte window to the next new line byte pattern.
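One possible answer to these questions, as a sketch: read a fixed-size window, extend it to the next newline, and keep enlarging until the decoded text has enough lines for the grid. It assumes UTF-8 text and a `start` offset that falls on a line boundary. `read_window` and `read_lines_for_grid` are hypothetical names.

```python
import io


def read_window(f, start: int, size: int) -> str:
    """Read about `size` bytes from `start`, extended to the next newline so
    the window never ends in the middle of a line or a multi-byte character."""
    f.seek(start)
    data = f.read(size)
    if data and not data.endswith(b"\n"):
        data += f.readline()  # up to and including the next b"\n", or EOF
    return data.decode("utf-8")


def read_lines_for_grid(f, start: int, size: int, row_count: int) -> str:
    """Enlarge the window until it holds at least `row_count` lines or hits EOF."""
    text = read_window(f, start, size)
    while text.count("\n") < row_count:
        more = read_window(f, start + len(text.encode("utf-8")), size)
        if not more:
            break
        text += more
    return text


# Any binary file object works. An in-memory one keeps the example small.
sample = io.BytesIO(b"one\ntwo\nthree\nfour\n")
print(read_lines_for_grid(sample, 0, 2, 3))
```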

There's only one way to find out.

19. Updating Characters

18. Input

Notes

- The greedy line wrapping algorithm is more appropriate for an input area.

17. Scrolling

Notes

- Move the camera, not the text.

- Don't forget to cull the off-screen cells and glyphs.

16. Line Breaking/Word Wrapping.

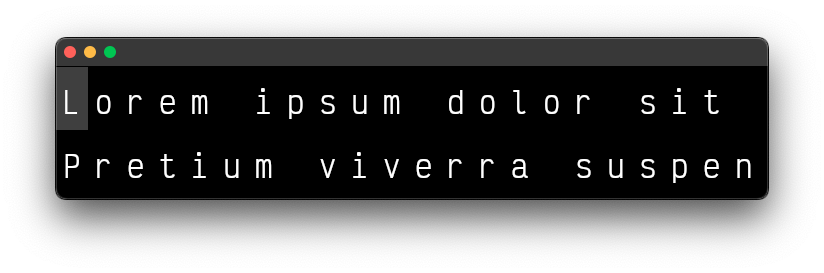

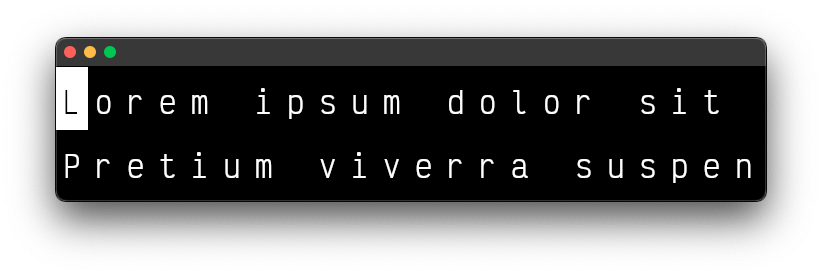

15. Lorem Ipsum

Notes

- "Lorem ipsum dolor sit amet, consectetur adipiscing elit, sed do eiusmod tempor incididunt ut labore et dolore magna aliqua."

- A word should begin on a new row if the current row does not fully support it.

14. Cell Grid

13. Cell Column Count

12. Cell Row and Glyph Position

11. Character Deletion

10. Character Input

9. Hello World

Notes

The font is VictorMono-Regular.

8. Render a Single Glyph

Notes

Rendering a single glyph requires simply selecting the correct block index, UV coordinates, and transforming the quad to the dimensions of the image.
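A sketch of the UV step, assuming the atlas records each glyph's rectangle in pixels with the origin at the texture's top-left corner. `glyph_uv` is a hypothetical helper.

```python
def glyph_uv(x: int, y: int, w: int, h: int,
             atlas_w: int, atlas_h: int) -> tuple[float, float, float, float]:
    """Normalize a glyph's pixel rectangle to (u0, v0, u1, v1) in [0, 1]."""
    return (x / atlas_w, y / atlas_h, (x + w) / atlas_w, (y + h) / atlas_h)


# A 256 by 128 glyph image at the corner of a 512 by 512 block:
print(glyph_uv(0, 0, 256, 128, 512, 512))  # (0.0, 0.0, 0.5, 0.25)
```

The same `w` and `h` would also serve as the quad's scale, so the glyph is drawn at the dimensions of its image.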

Since I have 1x and 2x pixel density monitors, I'll have to generate another atlas with a doubled font size for moving windows across them. Resizing the camera's viewport does not yield crisp results.

Supporting multiple font sizes will require additional atlases. As you can imagine, the memory costs would outweigh the benefits, which could explain why the elder_devs found other means to render text, even if they limited the glyph palette.

Side Note

An elder_dev is someone who cut through the forest of tough software and hardware problems of the past. They laid the foundation of what we build upon.

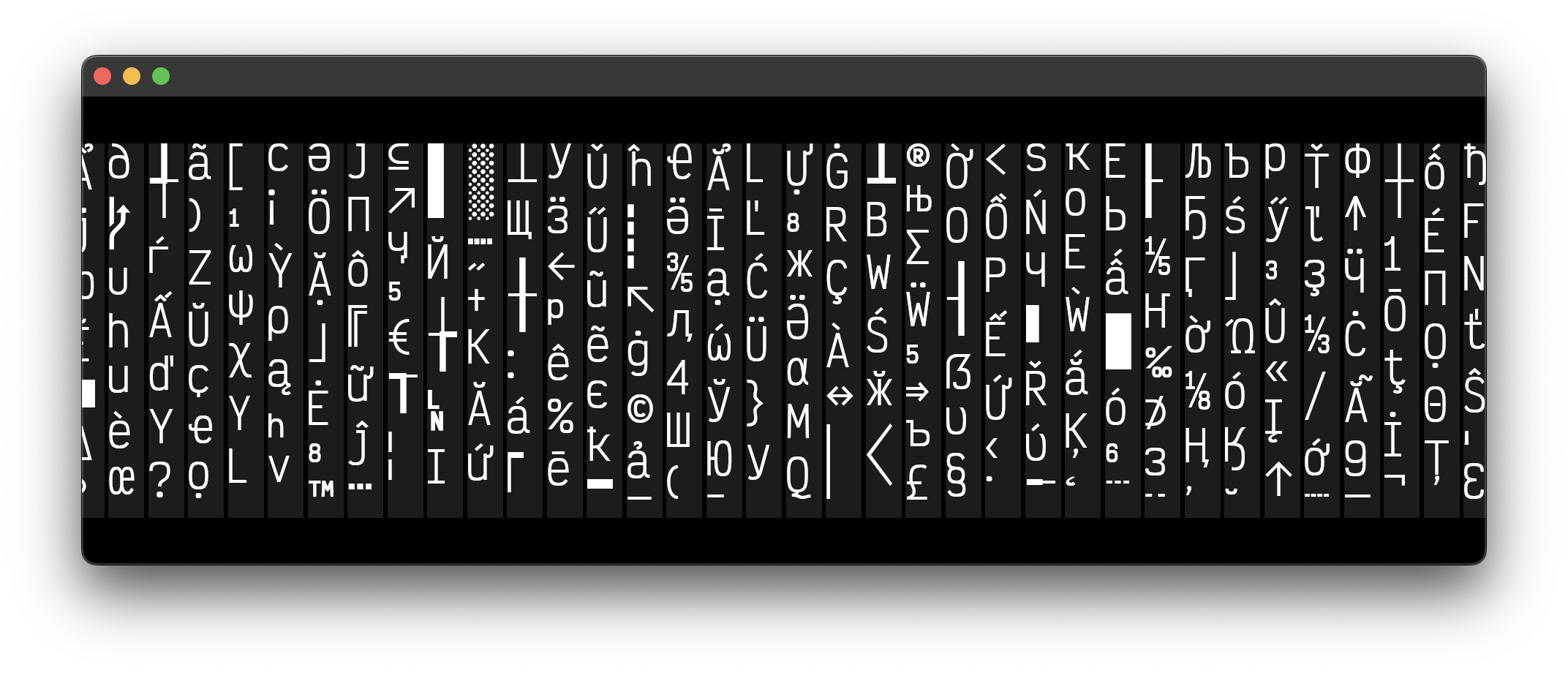

7. Glyph Atlas

Notes

Text rendering with a GPU is a bit of a rabbit hole. Among single and multichannel signed distance fields, curve tessellation, and Bézier curve outlines in the shader, the most direct approach is to use an atlas.

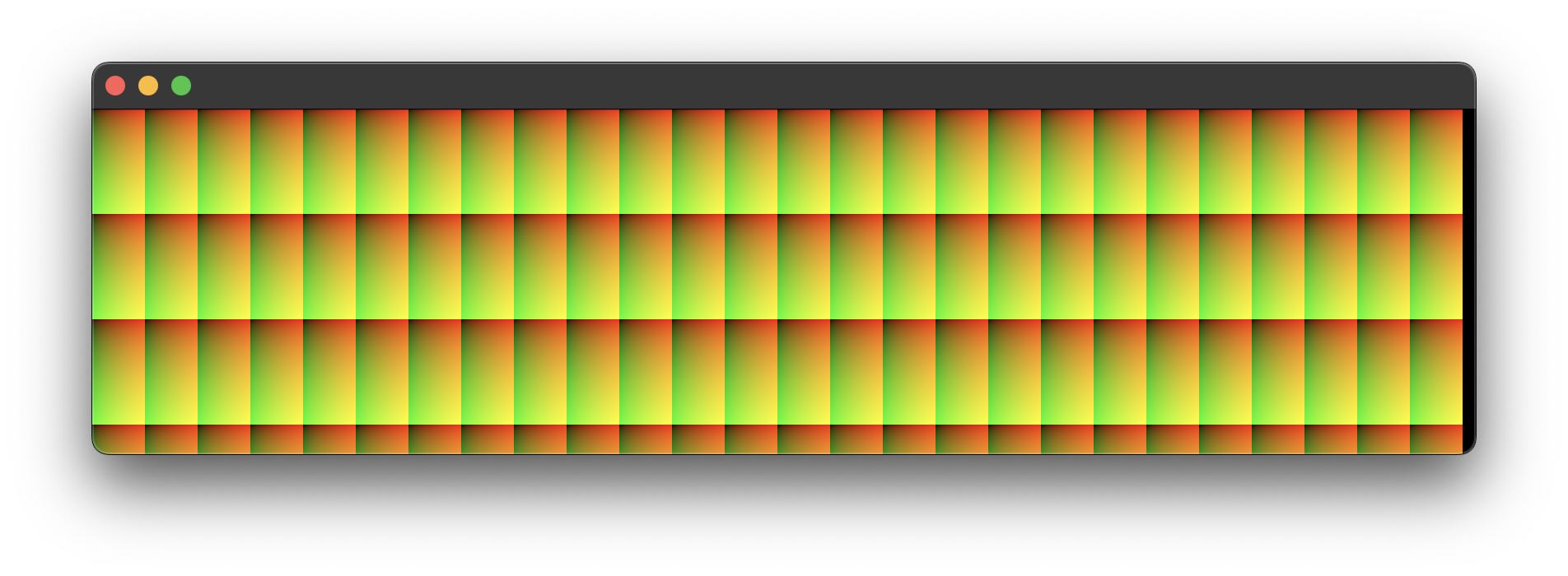

This glyph atlas is composed of the rendered images for each glyph available in the font file. Since the total number of glyphs available can vary between fonts, it seems best to generate the atlas as several vertical slices as needed. For the sake of the screenshot, the height of each block is 512 which generates 108 blocks for the font size of 32. A larger block size will require fewer blocks.

The implementation is simple. Place the glyph in the block with the available space and remember its location. There is a one pixel gap between the glyphs and each block has its own transformation for debugging purposes.
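A sketch of that placement rule as a simple row-by-row packer. It only ever fills the current block, assumes every glyph fits inside an empty block, and uses hypothetical names (`Atlas`, `Slot`, `place`).

```python
from dataclasses import dataclass


@dataclass
class Slot:
    block: int  # which atlas block holds the glyph
    x: int
    y: int
    w: int
    h: int


class Atlas:
    def __init__(self, block_w: int, block_h: int, gap: int = 1):
        self.block_w, self.block_h, self.gap = block_w, block_h, gap
        self.block = 0
        self.x = 0
        self.y = 0
        self.row_h = 0  # tallest glyph in the current row
        self.slots: dict[str, Slot] = {}

    def place(self, char: str, w: int, h: int) -> Slot:
        if self.x + w > self.block_w:   # row is full: start a new row
            self.x = 0
            self.y += self.row_h + self.gap
            self.row_h = 0
        if self.y + h > self.block_h:   # block is full: start a new block
            self.block += 1
            self.x = self.y = self.row_h = 0
        slot = Slot(self.block, self.x, self.y, w, h)
        self.slots[char] = slot         # remember the glyph's location
        self.x += w + self.gap          # leave a one pixel gap
        self.row_h = max(self.row_h, h)
        return slot
```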

Game engines would typically use a texture packer, which finds the optimal configuration to save on space. They may also choose to select only the most common characters, depending on factors like distribution region and scope.

6. Orthographic Camera

320 × 320

480 × 320

Notes

The use of an orthographic camera resolves the distortion issues. The window can be resized and moved across screens of differing pixel densities while maintaining the image's display size.
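Why the image keeps its size can be checked with a small sketch. It assumes an OpenGL-style orthographic matrix that maps a box measured in window units to normalized device coordinates in [-1, 1]. The source does not say which graphics API or depth convention is in use, and `ortho` and `on_screen_width` are hypothetical helpers.

```python
def ortho(left: float, right: float, bottom: float, top: float,
          near: float = -1.0, far: float = 1.0) -> list[list[float]]:
    """Row-major orthographic projection mapping the given box to NDC."""
    return [
        [2 / (right - left), 0, 0, -(right + left) / (right - left)],
        [0, 2 / (top - bottom), 0, -(top + bottom) / (top - bottom)],
        [0, 0, -2 / (far - near), -(far + near) / (far - near)],
        [0, 0, 0, 1],
    ]


def on_screen_width(units: float, window_w: float, window_h: float) -> float:
    """Width in window units of an object `units` wide after projection."""
    m = ortho(0, window_w, 0, window_h)
    ndc_width = m[0][0] * units       # extent in NDC, which spans 2 units
    return ndc_width * window_w / 2   # back to window units


for size in [(320, 320), (480, 320)]:
    print(size, round(on_screen_width(128, *size), 6))  # 128.0 for both
```

Because the projection is rebuilt from the window's dimensions, widening the window shrinks the NDC extent by exactly the amount the viewport stretches it.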

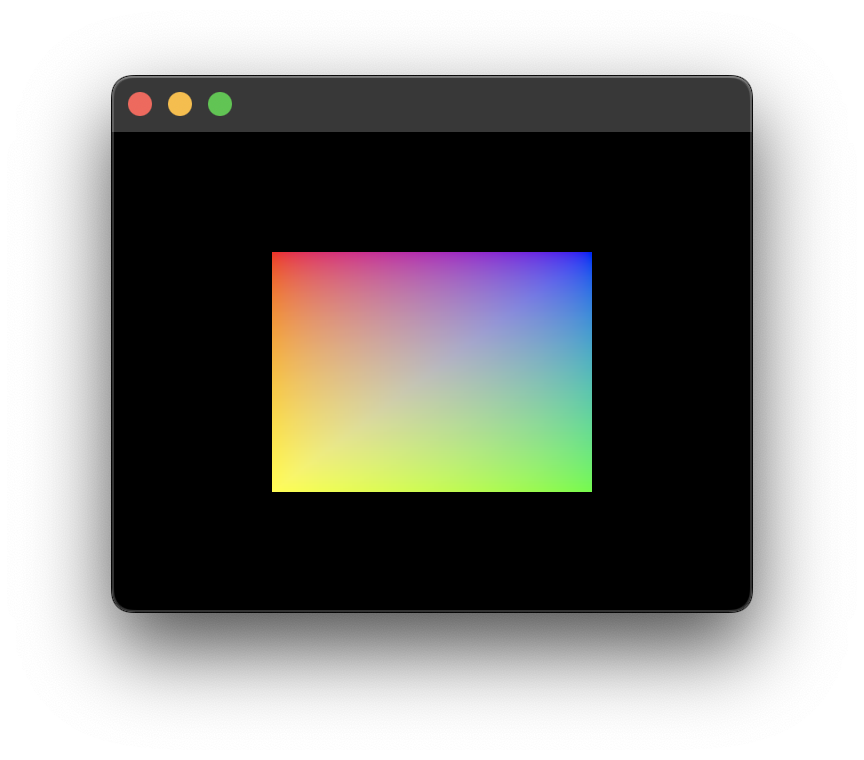

5. Render Image

320 × 240

320 × 320

Notes

The source image is 256 by 256 scaled by 0.5 to 128 × 128. Its distortion in the first screenshot is due to the proper mapping of its edges to the UV coordinates of the quad. Additionally, the vertices are still hard-coded in normalized device coordinates (NDC), so the image appears undistorted only when the window's width and height are equal, as shown in the second screenshot.

4. UV Coordinates Quad

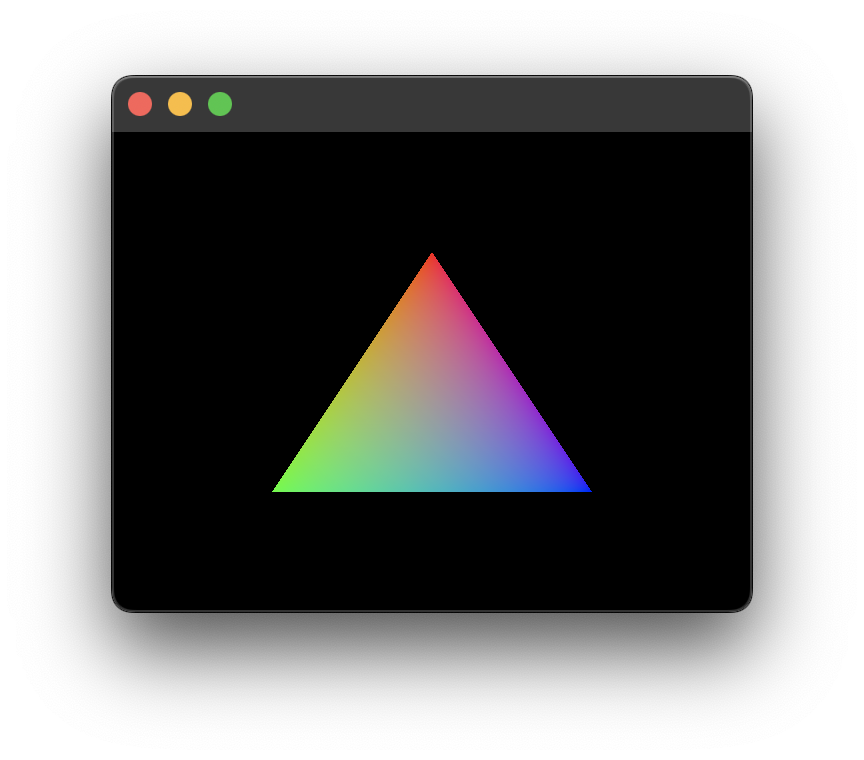

3. Hello Quad

2. Hello Triangle

1. Initial Clear Color Render

1.0 DPI

2.0 DPI

Notes

Apple displays have twice the pixel density of standard displays. Opening the window on a standard display and moving the window to the Apple display will cause the image to appear smaller due to the doubling of the available pixels in the window. The solution is simple. When the scale factor changes, adjust the dimensions of the render surface just as you would with a resized window.
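The adjustment amounts to recomputing the surface's physical size from the window's logical size. This sketch assumes the windowing layer reports a logical size and a scale factor; `physical_size` is a hypothetical helper, not a call from any particular library.

```python
def physical_size(logical_w: float, logical_h: float,
                  scale_factor: float) -> tuple[int, int]:
    """Pixel dimensions the render surface needs for a logical window size."""
    return round(logical_w * scale_factor), round(logical_h * scale_factor)


print(physical_size(320, 240, 1.0))  # (320, 240) on a standard display
print(physical_size(320, 240, 2.0))  # (640, 480) on a 2.0 DPI display
```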

0. Basic Window